DevSecOps - Advanced

1. DevOps vs DevSecOps

What you need to know

The "Security" Silo

- As defined earlier in this roadmap, "DevOps" is a set of practices, tools, and culture that makes software delivery fast, efficient, and reliable. In other words, the goal of "DevOps" is to make software delivery faster and more efficient without compromising reliability. Reliability covers many aspects, such as functionality (new code shouldn't break existing functionality), performance, and, most importantly, "Security".

- As "DevOps" adoption grew, its tools and processes matured and came to cover many aspects of reliability. For example, to ensure that new code doesn't break existing functionality, CI/CD tools let us run unit or integration tests as part of the pipeline. We can even use metrics such as code coverage as an indicator of test quality.

- However, one aspect of reliability that didn't mature at the same pace was "Security". In other words, while DevOps broke down the silo between "Dev" and "Ops", "Security" teams remained in their own silo.

Why Does "Security" Fall Behind?

Many challenges make it hard to include "Security" in the "DevOps" tools and processes. Let's explore some of them:

- Human Nature: When building things, we focus on “How things should work” (the happy path), not on “What could go wrong”, so security is often overlooked.

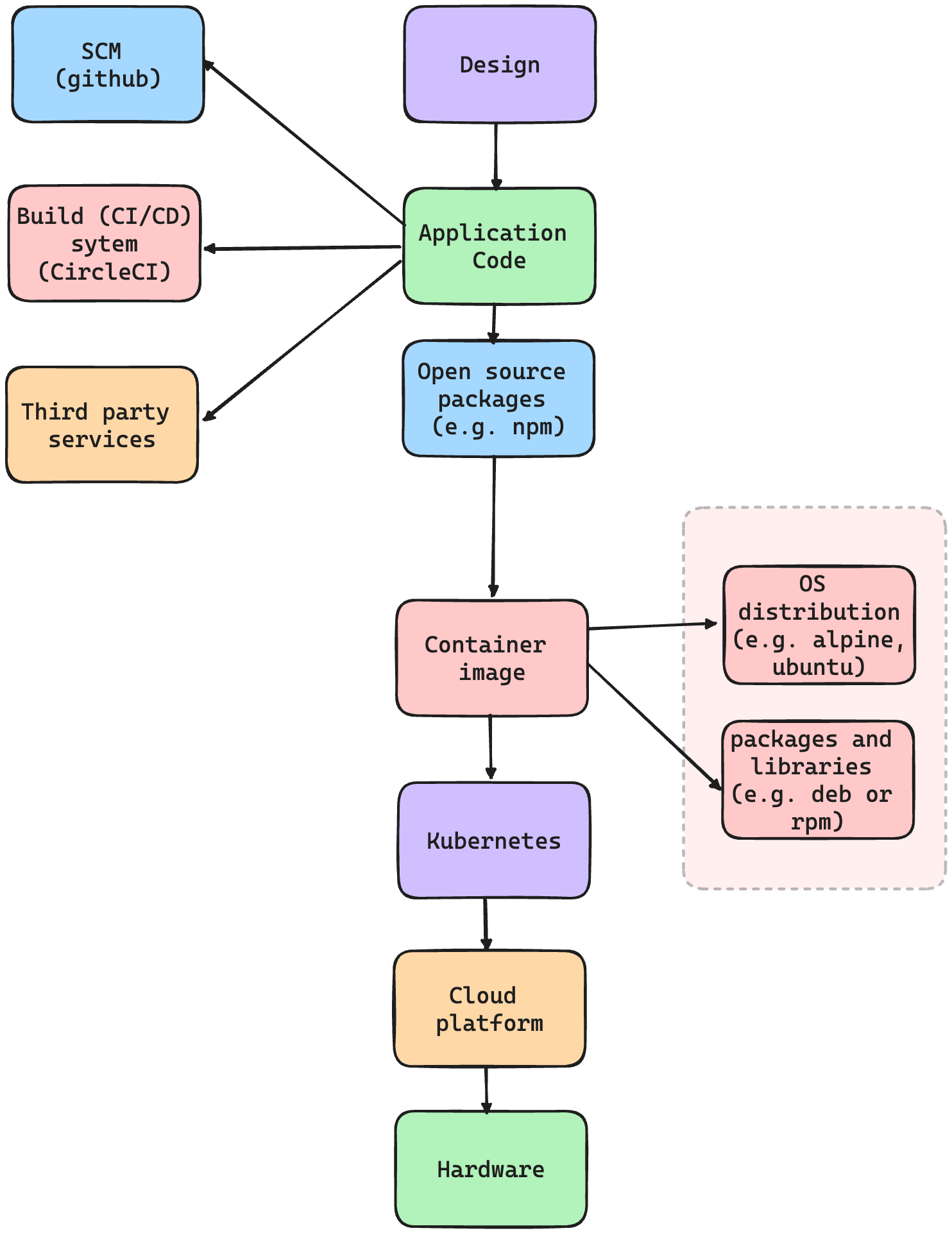

- Nature of Software: Software is built on many layers, abstractions, and dependencies, and any of these underlying components can cause security issues (e.g., open-source dependencies, cloud configuration, or even the CI/CD pipeline itself). Hence, to secure an application, we need to unpack all of these layers and abstractions and ensure each of them is properly configured.

- Nature of security issues:

  - Security issues are rarer than other types of bugs (e.g., stability or performance issues), but they are usually more impactful.

  - Security issues can exist for years without being detected. When they are discovered, the person fixing the problem is usually not the person who introduced it.

  - This dilutes the normal “trial and error” feedback loop, so we learn from security mistakes much more slowly.

- Nature of security tools:

  - Until recently, many security tools were designed for manual use rather than automation, which makes them harder to include in a CI/CD pipeline.

  - Most security tools produce a lot of false positives. This makes them hard to use for automated decisions (e.g., failing a build pipeline) without a significant amount of tuning.

  - Most security tools miss many business logic security issues (e.g., broken authorization), which leads to many false negatives if we rely on them alone.

Adding "Sec" to "DevOps"

That is why "DevSecOps" was needed: to overcome the above challenges and include "Security" in the "DevOps" tools and processes. In other words, the goal of "DevSecOps" is to integrate "Security" into all stages of the SDLC (Software Development Lifecycle) without compromising speed and efficiency, which are the main goals of DevOps.

2. Defining: Identifying the Threats

What you need to know

- To verify that an application is "Secure", we first need to define what "Secure" means for this application. This is one of the hardest challenges of "Security", as we can't say something is "Secure" without clarifying what it is "Secure" from.

- In other words, to define "Security", we need to identify the main security threats that could affect the application. We also need to identify the security controls that must be implemented to mitigate these threats.

- The process of identifying the main threats and their corresponding security controls is called "Threat Modeling", and will be discussed in more detail shortly.

When to Start Planning For Security?

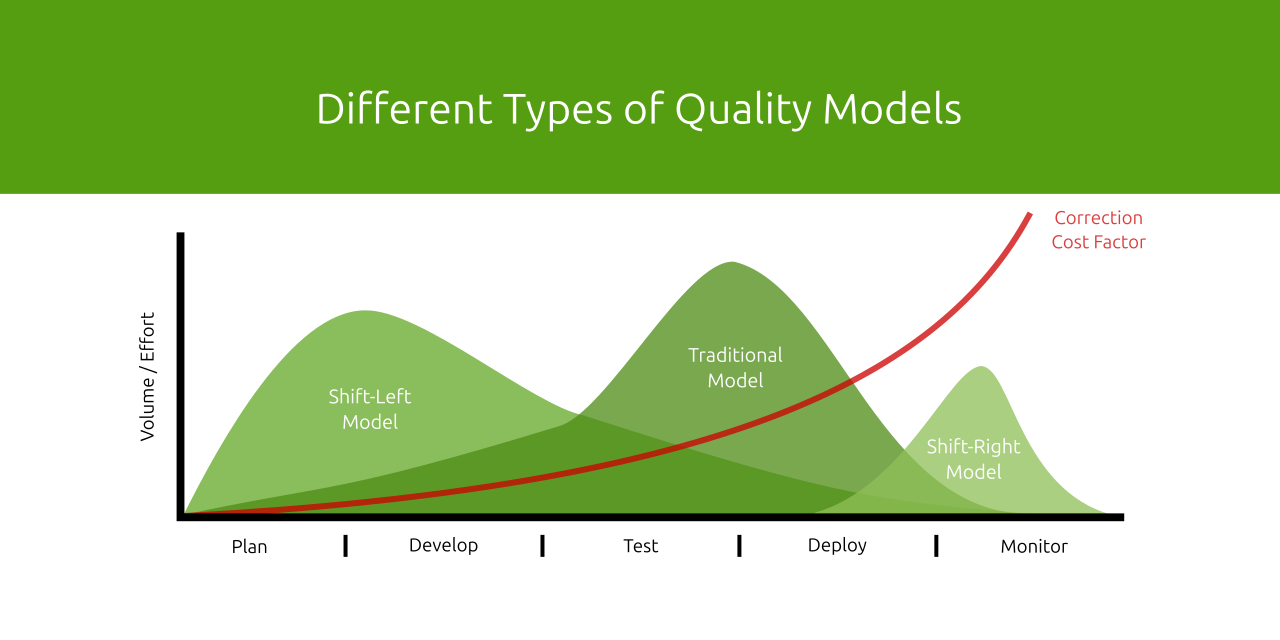

- Ideally, we should start planning for Security during the "Design" phase. The earlier, the better: the later a security issue is discovered, the higher the cost of fixing it in time, effort, lost revenue, etc.

Threat Modeling

- As mentioned above, "Threat Modeling" is how we define what "Secure" means for our application. We identify the main threats and their corresponding mitigations (security controls), which makes threat modeling the most critical aspect of "DevSecOps". The goal of a "Threat Model" is to answer the following questions:

  - What are we working on? -> The Scope

  - What can go wrong? -> The Threats

  - What are we going to do about it? -> The Mitigations

  - Did we do a good job?

- Threat modeling should be performed during the Design phase, once the technical scope of the application is determined. It should be done in meetings that include stakeholders from the software engineering team, the security team, and any other teams involved in the design (e.g., the platform team if we are using a cloud service).

- The output of threat modeling is a list of threats and their corresponding mitigations. For example:

  Threat: Unauthorized access to our API could lead to customer data exposure or tampering.

  Mitigation: We authenticate all requests through the `Authorization` header, and we verify that the authenticated user has access to the resource the request targets.

Resources

- Threat Modeling: Designing for Security by Adam Shostack

- Threat Modeling Training Course - Practical DevSecOps

- Threat Modeling Handbook

- Threat model Template

3. Verifying: DevSecOps Processes and Tools

What you need to know

- Once the main threats and their corresponding security controls are identified, we can verify "Security" by adding tests, tools, and/or processes, either to our DevOps pipeline or as separate scheduled jobs. These continuously check that the security controls are implemented correctly and that new code or configuration changes don't break them in the future. In other words, one output of the "Threat Model" should be a continuous testing plan. That plan covers the mitigations of high-impact threats and those most likely to be broken by future code or configuration changes.

- There are several categories of tools we can choose from to build this testing plan, depending on the mitigations we want to cover.
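The link between the threat model and the continuous testing plan can be sketched in a few lines of Python. This is a minimal illustration. It assumes a hypothetical `Threat` record with an `impact` rating and a `likely_to_regress` flag, which is not a standard threat-model format. The first entry reuses the API threat from the example above; the second is a made-up low-impact threat.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Threat:
    # Hypothetical fields chosen for this sketch, not a standard format
    description: str
    mitigation: str
    impact: str  # assumed scale: "low", "medium", or "high"
    likely_to_regress: bool  # could future code/config changes easily break the mitigation?


def continuous_testing_plan(threats: List[Threat]) -> List[str]:
    """Select mitigations to verify continuously: high-impact or likely to regress."""
    return [t.mitigation for t in threats if t.impact == "high" or t.likely_to_regress]


threats = [
    Threat(
        description="Unauthorized access to our API could lead to customer data exposure or tampering.",
        mitigation="Authenticate every request via the Authorization header and check resource access",
        impact="high",
        likely_to_regress=True,
    ),
    Threat(
        description="Hypothetical: verbose error pages leak stack traces.",
        mitigation="Disable debug mode in production",
        impact="low",
        likely_to_regress=False,
    ),
]

print(continuous_testing_plan(threats))
```

Each selected mitigation would then be mapped to one of the test or scan categories that follow. Low-impact, stable mitigations can be checked less often, for example during periodic reviews.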

3.1 Unit and Integration Tests

- Unit and integration tests are very useful for testing some of the most important and most recurring categories of mitigations. These include authentication, authorization, CSRF protection, and other mitigations related to business logic. As mentioned earlier, most security tools (e.g., SAST and DAST) usually miss this category of issues.

- For example, the following integration tests check authentication and authorization for a Django application:

```python
from django.contrib.auth import get_user_model
from django.test import Client, TestCase


class ShareAPIViewTestCase(TestCase):
    def setUp(self):
        self.client = Client()
        # The test database starts empty, so create the users the tests log in as
        User = get_user_model()
        User.objects.create_user(username='user1', password='password1')
        User.objects.create_user(username='user2', password='password2')

    def test_missing_session_cookie(self):
        """Test that a 401 response is returned when the session cookie is missing"""
        response = self.client.get('/api/share/')
        self.assertEqual(response.status_code, 401)

    def test_valid_cookie_different_user_file(self):
        """Test that a 403 response is returned when a valid cookie for user 1 is provided and
        the file_id input parameter is a valid id of a file owned by a different user"""
        # Log in as user1 so the client sends a valid session cookie
        self.client.login(username='user1', password='password1')

        # Assuming you have a function or method to create a file owned by a different user
        file_id = create_file_for_different_user()

        response = self.client.get('/api/share/', {'file_id': file_id})
        self.assertEqual(response.status_code, 403)
```

- You can find more details about writing unit and integration tests in this roadmap.
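The Django example above is an integration test. At the unit level, the same authorization rule can be tested without a web server if it lives in a plain function. Here is a minimal sketch using Python's built-in `unittest`. The `File` record and `can_share_file` helper are hypothetical and not part of Django.

```python
import unittest
from dataclasses import dataclass
from typing import Optional


@dataclass
class File:
    # Hypothetical record with only the fields the authorization rule needs
    id: int
    owner_id: int


def can_share_file(user_id: Optional[int], file: File) -> bool:
    """Authorization rule under test: only an authenticated owner may share a file."""
    if user_id is None:  # unauthenticated request
        return False
    return file.owner_id == user_id


class CanShareFileTests(unittest.TestCase):
    def test_unauthenticated_user_is_denied(self):
        self.assertFalse(can_share_file(None, File(id=1, owner_id=1)))

    def test_owner_is_allowed(self):
        self.assertTrue(can_share_file(1, File(id=1, owner_id=1)))

    def test_other_users_file_is_denied(self):
        self.assertFalse(can_share_file(1, File(id=2, owner_id=2)))
```

These run with `python -m unittest`. Keeping the rule in one small function makes it cheap to cover every case. The integration test then only has to confirm that the view actually calls the rule.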

3.2 SAST (Static application security testing)

- Another useful category of security tools is SAST, which scans the code for common patterns that could lead to security issues. It is especially useful for identifying dangerous functions and patterns, such as:

  - A raw SQL query built from user input, which could lead to SQL injection.

  - A shell command built from user input, which could lead to command injection.

- For example, the route below has an open-redirect vulnerability because it passes user input (the `url` query parameter) to the dangerous function `res.redirect`:

```javascript
// Assumes an Express `app` and a `users` array are defined earlier in the file
app.get('/users/:id', (req, res) => {
  const userId = Number(req.params.id);
  const user = users.find((user) => user.id === userId);
  console.log(req.query);

  if (!user) {
    if (req.query.url) {
      // Vulnerable: redirects to an unvalidated, user-controlled URL
      res.redirect(req.query.url);
    } else {
      res.redirect('https://www.example.com');
    }
  } else {
    res.json(user);
  }
});
```

A SAST scan of this code (using Semgrep in this example) produces the following finding:

```
$ semgrep scan

┌──── ○○○ ────┐
│ Semgrep CLI │
└─────────────┘

[...]

❯❱ javascript.express.security.audit.express-open-redirect.express-open-redirect
      The application redirects to a URL specified by user-supplied input `req` that is not validated.
      This could redirect users to malicious locations. Consider using an allow-list approach to validate
      URLs, or warn users they are being redirected to a third-party website.
      Details: https://sg.run/EpoP

       29┆ res.redirect(req.query.url);
```
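The allow-list approach that Semgrep suggests can be sketched in Python with the standard library's `urllib.parse`. The `ALLOWED_HOSTS` set, `DEFAULT_URL`, and `safe_redirect_target` are assumptions for illustration. An Express app would apply the same checks before calling `res.redirect`.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed allow-list of hosts users may be redirected to, and a safe fallback
ALLOWED_HOSTS = {"www.example.com"}
DEFAULT_URL = "https://www.example.com"


def safe_redirect_target(url: Optional[str]) -> str:
    """Return url only if it is an absolute HTTPS URL on an allowed host; otherwise the default."""
    if not url:
        return DEFAULT_URL
    try:
        parsed = urlparse(url)
    except ValueError:  # e.g. a malformed IPv6 host
        return DEFAULT_URL
    # hostname is parsed after any "user@" part, so "https://www.example.com@evil.com" is rejected
    if parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS:
        return url
    return DEFAULT_URL
```

This sketch also rejects relative paths such as `/dashboard` and scheme-relative URLs such as `//evil.com`. If you need same-site relative redirects, allow them explicitly rather than loosening the host check.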

- However, SAST tools usually generate a lot of false positives, so blocking your CI/CD pipeline on all SAST findings is not recommended, as it would quickly get noisy.

- Here is the recommended approach:

  - A daily full-repo SAST scan, with a specific team accountable for triaging and fixing new findings from this scan.

  - An incremental PR/MR scan that checks new code for newly introduced findings and adds them as comments on the PR/MR.

  - In the CI/CD pipeline, block only on findings related to the high-impact mitigations identified in the threat model. For example, block only on SQL injection rules that have been tested and verified not to generate false positives.
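The third item of the recommended approach can be sketched as a small post-processing step over Semgrep's JSON output (produced with `semgrep scan --json`). This assumes each result carries `check_id`, `path`, and `start.line` fields. `BLOCKING_RULES` is a hypothetical allow-list; the open-redirect rule from the example above only stands in for the rules you have vetted yourself.

```python
from typing import List

# Rule IDs vetted against the threat model and verified to be low-noise.
# Assumption: the open-redirect rule from the example stands in for your own list.
BLOCKING_RULES = {
    "javascript.express.security.audit.express-open-redirect.express-open-redirect",
}


def blocking_findings(report: dict) -> List[dict]:
    """Return findings in a Semgrep JSON report whose rule ID is in BLOCKING_RULES."""
    return [r for r in report.get("results", []) if r.get("check_id") in BLOCKING_RULES]


def gate(report: dict) -> int:
    """Print blocking findings and return a CI exit code (1 = fail the pipeline)."""
    blocking = blocking_findings(report)
    for r in blocking:
        print(f"BLOCKING {r['check_id']} at {r['path']}:{r['start']['line']}")
    return 1 if blocking else 0
```

In a CI job, this could run after the scan as `sys.exit(gate(json.load(open("semgrep.json"))))`. All other findings still flow to the daily scan and PR comments without failing the build.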

- Here are some SAST tools to explore:

  - Semgrep: Lets you write custom rules and choose which rules to run, which makes it flexible and easy to adapt to your threat model mitigations. It has a free community edition, but most advanced features (e.g., cross-file analysis, PR scans, and an AI assistant for auto-triage and auto-fix) are in the paid version.

  - OpenGrep: An open-source fork of Semgrep's community edition.

  - QwietAI: Uses a code property graph to identify vulnerabilities. In theory, this lets it reduce false positives and identify more complex vulnerabilities (e.g., cross-file vulnerabilities).

  - Corgea: Uses AI to triage and fix findings from other SAST tools, and also uses AI to scan the code itself. This lets it identify business logic issues (an approach it calls BLAST).

- The following are more resources about SAST:

3.3 DAST (Dynamic application security testing)

- DAST analyzes a running application by simulating attacks and observing how it responds to find potential vulnerabilities. Unlike SAST, it doesn't need access to the application's source code.

- Burp Suite and OWASP ZAP are the most popular DAST tools. However, they don't work well with modern applications (e.g., single-page applications built with React). They are also not optimized to run within CI/CD pipelines, as they are usually time-consuming and noisy.

- Some more recent DAST tools are worth exploring, like Escape and Akto API Security, which are better equipped to handle modern applications and APIs. Generally, though, it is still recommended to run DAST as a separate scheduled job rather than within your CI/CD pipeline.
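To illustrate the DAST idea of "simulate an attack, observe the response", here is a toy Python probe for the open-redirect example above. It sends an attacker-controlled `url` parameter and checks whether the app answers with a redirect to it. `ATTACKER_URL`, `probe_open_redirect`, and `http_send` are hypothetical names for this sketch. Real DAST tools crawl the application and try many payloads across many vulnerability classes.

```python
import http.client
from typing import Callable, Dict, Tuple
from urllib.parse import urlencode

# A transport takes a request target (path + query string) and returns (status, headers)
Send = Callable[[str], Tuple[int, Dict[str, str]]]

# Hypothetical attacker-controlled destination used as the attack payload
ATTACKER_URL = "https://attacker.example/phish"


def probe_open_redirect(send: Send, path: str, param: str = "url") -> bool:
    """Send a malicious redirect target and report whether the app redirects to it."""
    status, headers = send(f"{path}?{urlencode({param: ATTACKER_URL})}")
    location = headers.get("location", "")
    return 300 <= status < 400 and location.startswith(ATTACKER_URL)


def http_send(host: str, port: int) -> Send:
    """Plain-HTTP transport; http.client does not follow redirects, so we see the 3xx itself."""
    def send(target: str) -> Tuple[int, Dict[str, str]]:
        conn = http.client.HTTPConnection(host, port, timeout=5)
        try:
            conn.request("GET", target)
            resp = conn.getresponse()
            # Lower-case header names so the lookup above is case-insensitive
            return resp.status, {k.lower(): v for k, v in resp.getheaders()}
        finally:
            conn.close()
    return send
```

Against a local copy of the Express example, a call might look like `probe_open_redirect(http_send("localhost", 3000), "/users/999")`. This assumes the app listens on port 3000 and user 999 doesn't exist. The transport is injected so the same check can be pointed at a staging URL from a scheduled job.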

3.4 IAST (Interactive application security testing)

- IAST is a hybrid approach. Like DAST, it tests applications dynamically by simulating attacks, but it also integrates directly with the application's runtime to observe the application's behavior during execution. This lets IAST produce more accurate findings and add more context to them (e.g., the file and line of code where the dangerous function is used).

- Like DAST, it is recommended that you run IAST as a separate scheduled job rather than within your CI/CD pipeline.

- To try IAST, you could start with the free community edition of Contrast Security.
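To make the IAST mechanism concrete, here is a toy Python sketch of its core idea. It instruments a dangerous function (a "sink") at runtime and reports where user-controlled data reaches it, including the file and line. The `Tainted` marker class and the `sink` decorator are hypothetical. Real IAST agents hook the language runtime or framework and track taint through string operations, which this sketch does not (for example, `Tainted("a") + "b"` is a plain `str`).

```python
import inspect
from functools import wraps
from typing import List, Tuple


class Tainted(str):
    """Marks a string that came from user input (toy taint tracking)."""


findings: List[Tuple[str, str, int]] = []


def sink(name: str):
    """Instrument a dangerous function: record where tainted input reaches it."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            if any(isinstance(v, Tainted) for v in (*args, *kwargs.values())):
                caller = inspect.stack()[1]  # the frame that called the sink
                findings.append((name, caller.filename, caller.lineno))
            return func(*args, **kwargs)
        return wrapper
    return decorator


@sink("redirect")
def redirect(url: str) -> Tuple[int, str]:
    # Stand-in for a framework's redirect function
    return 302, url


redirect("https://www.example.com")        # constant value: not reported
redirect(Tainted("https://evil.example"))  # user input: reported with file and line
print(findings)
```

The file-and-line context in each finding is what distinguishes this from a pure DAST result, which only sees the HTTP response.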

Note that you don't necessarily have to use all types of security scanning tools, such as SAST, DAST, and IAST. Start with the most important mitigations in your threat model and select the tool that gives you the best coverage. If unsure, starting with SAST is usually the easiest path.

3.5 Secret Scanning

Another important threat is hard-coded credentials/secrets in your code, which could expose these credentials and whatever data they have access to. This is especially dangerous in open-source repos. Hence, using a tool that scans your repos for secrets is recommended.

- TruffleHog is an open source tool that can be used for secret scanning, and there are also some paid solutions, such as GitGuardian and Semgrep Secrets.
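At its simplest, secret scanning is pattern matching over file contents. Here is a minimal Python sketch with two illustrative detectors: the well-known AWS access key ID format and PEM private key headers. The `SECRET_PATTERNS` table and `scan_text` function are assumptions of this sketch. Tools like TruffleHog ship many more detectors and can also check whether a found credential is still valid.

```python
import re
from typing import Iterator, Tuple

# Illustrative detectors only; real scanners use hundreds of patterns plus entropy checks
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "Private key header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan_text(text: str) -> Iterator[Tuple[int, str]]:
    """Yield (line_number, detector_name) for every line that matches a secret pattern."""
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                yield lineno, name


sample = 'region = "us-east-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
print(list(scan_text(sample)))
```

`AKIAIOSFODNN7EXAMPLE` is AWS's documented placeholder key, not a real credential. Because a leaked secret stays in Git history, a finding usually means rotating the credential, not just deleting the line.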

3.6 SCA (Software Composition Analysis)

- As mentioned earlier, the security of an application doesn't rely only on the application code but on all of the underlying layers and abstractions. One of those layers is the open-source packages (npm, pip, Maven, etc.) that the application uses as direct or indirect dependencies. A vulnerability in any of these packages could potentially be used to attack the application that uses it.

- Hence, it is recommended to use SCA tools to scan your application to get the list of open source dependencies, their current versions, and any known vulnerabilities that affect these versions.

- For that, we could use tools such as Dependabot or Snyk. However, most SCA tools generate a huge number of alerts, so it is recommended to explore tools that perform "Reachability Analysis", such as Coana (recently acquired by Socket.dev) or Endor Labs.

- Reachability analysis is the automated analysis of the code and its dependencies to determine whether the vulnerable parts of the code (e.g., the vulnerable function) in the package with the known vulnerability are reachable from the application code. This enables us to dismiss 70-90% of the alerts, as in many cases, the vulnerability in the dependency is not reachable. For more details about "Reachability Analysis", you can check this post.
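At its core, reachability analysis is a graph search. Starting from the application's entry points, it walks the call graph and checks whether the vulnerable function named in an advisory is ever reached. Here is a minimal Python sketch over a hand-written call graph; the function names and the `ADVISORY-*` IDs are hypothetical. Real tools build the call graph from static analysis of your code and its dependencies, and must deal with dynamic dispatch, reflection, and similar complications.

```python
from collections import deque
from typing import Dict, List, Set


def reachable(call_graph: Dict[str, List[str]], entry_points: List[str]) -> Set[str]:
    """Breadth-first search: every function reachable from the application's entry points."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        for callee in call_graph.get(fn, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


# Hypothetical call graph mixing application code ("app.*") and a dependency ("lib.*")
call_graph = {
    "app.handle_upload": ["lib.parse_json"],
    "app.handle_login": ["lib.hash_password"],
    "lib.parse_json": ["lib.decode"],
    "lib.parse_yaml": ["lib.load_unsafe"],
}

# Hypothetical advisories for the dependency, each naming its vulnerable function
advisories = {"ADVISORY-1": "lib.load_unsafe", "ADVISORY-2": "lib.decode"}

reach = reachable(call_graph, ["app.handle_upload", "app.handle_login"])
for advisory_id, vulnerable_fn in advisories.items():
    status = "reachable" if vulnerable_fn in reach else "not reachable (candidate to dismiss)"
    print(advisory_id, status)
```

Here the application never calls `lib.parse_yaml`, so `ADVISORY-1` can be deprioritized even though the vulnerable package is installed. `ADVISORY-2` is reachable through `app.handle_upload` and should be fixed.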

3.7 Container Vulnerability Scanning

- Another layer that also needs to be secured is the container images being used. As with open-source packages, vulnerabilities in the OS packages inside a container image (e.g., deb or apk packages) could affect the application's security.

- A preventive measure is to use minimal (distroless) container images that don't include unnecessary packages and are patched regularly to minimize vulnerable packages, such as Chainguard Images.

- Besides that, there are tools that could be used to scan your container images for vulnerabilities, such as Trivy, Grype, or Docker Scout.

- It is recommended to run these scanners as part of the container image build pipeline, and also in a scheduled job that catches newly discovered vulnerabilities in images that have already been built.
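Trivy can gate a build on its own, for example with its `--severity` and `--exit-code` options. When the policy lives in a separate step, such as a scheduled job that reports on already-built images, its JSON report (`--format json`) can be post-processed instead. Here is a minimal Python sketch. It assumes the report's `Results` → `Vulnerabilities` structure with `VulnerabilityID`, `PkgName`, `Severity`, and `FixedVersion` fields. The policy of flagging only fixable HIGH/CRITICAL issues is an illustrative choice.

```python
from typing import List, Sequence


def fixable_findings(report: dict, severities: Sequence[str] = ("CRITICAL", "HIGH")) -> List[str]:
    """Return 'target: ID in package' for vulnerabilities at the given severities that have a fix."""
    findings = []
    for result in report.get("Results") or []:
        # "Vulnerabilities" can be missing or null when a target has none
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in severities and vuln.get("FixedVersion"):
                findings.append(f"{result.get('Target')}: {vuln['VulnerabilityID']} in {vuln['PkgName']}")
    return findings
```

A scheduled job could rescan each deployed image, run this over the report, and open a ticket or fail when the list is non-empty. Unfixable findings are left for periodic review.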

3.8 Cloud Configuration Scanning

- Similarly, if you are deploying your application on a cloud provider (e.g., AWS, GCP, or Azure), misconfigured cloud services could affect the security of your application (e.g., making an S3 bucket with sensitive data public could expose this sensitive data).

- Hence, it is recommended to scan your cloud service configuration periodically for misconfigurations, using tools such as Checkov, CloudSploit, or Scout Suite.
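As a small example of what such a scanner checks, here is a minimal Python sketch that flags S3 bucket policy statements granting access to everyone (`"Principal": "*"` or `{"AWS": "*"}`). The function names are hypothetical. The sketch ignores `Condition` blocks, ACLs, and S3 Block Public Access settings, all of which the tools above take into account, so it can over-report.

```python
from typing import List


def _is_everyone(principal) -> bool:
    """True if a policy Principal grants access to anyone ("*" or {"AWS": "*"})."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS")
        return aws == "*" or (isinstance(aws, list) and "*" in aws)
    return False


def public_statements(policy: dict) -> List[dict]:
    """Return Allow statements in a bucket policy whose Principal is everyone."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may appear as an object
        statements = [statements]
    return [s for s in statements if s.get("Effect") == "Allow" and _is_everyone(s.get("Principal"))]


policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:GetObject",
        "Resource": "arn:aws:s3:::example-bucket/*",
    }],
}
print(len(public_statements(policy)))
```

Running checks like this periodically, not only at deploy time, also catches changes made directly in the cloud console.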

3.9 SCM and CI/CD Configuration Scanning

- Another layer that could introduce vulnerabilities is the SCM (e.g., GitHub) or the CI/CD tools (e.g., GitHub Actions or CircleCI). For example:

  - Missing branch protection could allow an attacker to add malicious code to your repo.

  - A compromised GitHub Action (e.g., the `tj-actions/changed-files` compromise) could expose your production secrets or credentials.

- Hence, it is recommended to scan the configuration of your SCM and CI/CD tools, and for that, you could use tools such as legitify.
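One common mitigation for compromised third-party actions is to pin them to a full commit SHA instead of a tag. Tags can be moved to point at different code, which is how the `tj-actions/changed-files` incident reached users of its version tags. Here is a minimal Python sketch of a check for this in a workflow file. The regexes and `unpinned_actions` function are assumptions of this sketch, and it does not handle every YAML form (quoted values, for instance).

```python
import re
from typing import List, Tuple

# Matches "uses: owner/repo[/path]@ref" steps in a GitHub Actions workflow
USES_RE = re.compile(r"^\s*-?\s*uses:\s*([^@\s]+)@(\S+)")
FULL_SHA_RE = re.compile(r"^[0-9a-f]{40}$")


def unpinned_actions(workflow_text: str) -> List[Tuple[int, str]]:
    """Return (line_number, action@ref) for actions not pinned to a full 40-char commit SHA."""
    findings = []
    for lineno, line in enumerate(workflow_text.splitlines(), start=1):
        match = USES_RE.match(line)
        if not match:
            continue  # not a "uses:" line, or a local action like ./my-action (no "@ref")
        action, ref = match.groups()
        if action.startswith("docker://"):
            continue  # container image references are out of scope for this sketch
        if not FULL_SHA_RE.match(ref):
            findings.append((lineno, f"{action}@{ref}"))
    return findings


workflow = """jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: tj-actions/changed-files@0123456789abcdef0123456789abcdef01234567
      - uses: ./local-action
"""
print(unpinned_actions(workflow))
```

The SHA above is a made-up placeholder. Pinning trades convenience for safety, so it is usually paired with a tool such as Dependabot to propose SHA updates.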

You can find a sample service and its threat model, and the steps followed to create the threat model. Under "Step 6 - Create Tests to Continuously Verify Mitigations," you can find some examples of how the threat model was used to create a DevSecOps testing plan.

Summary

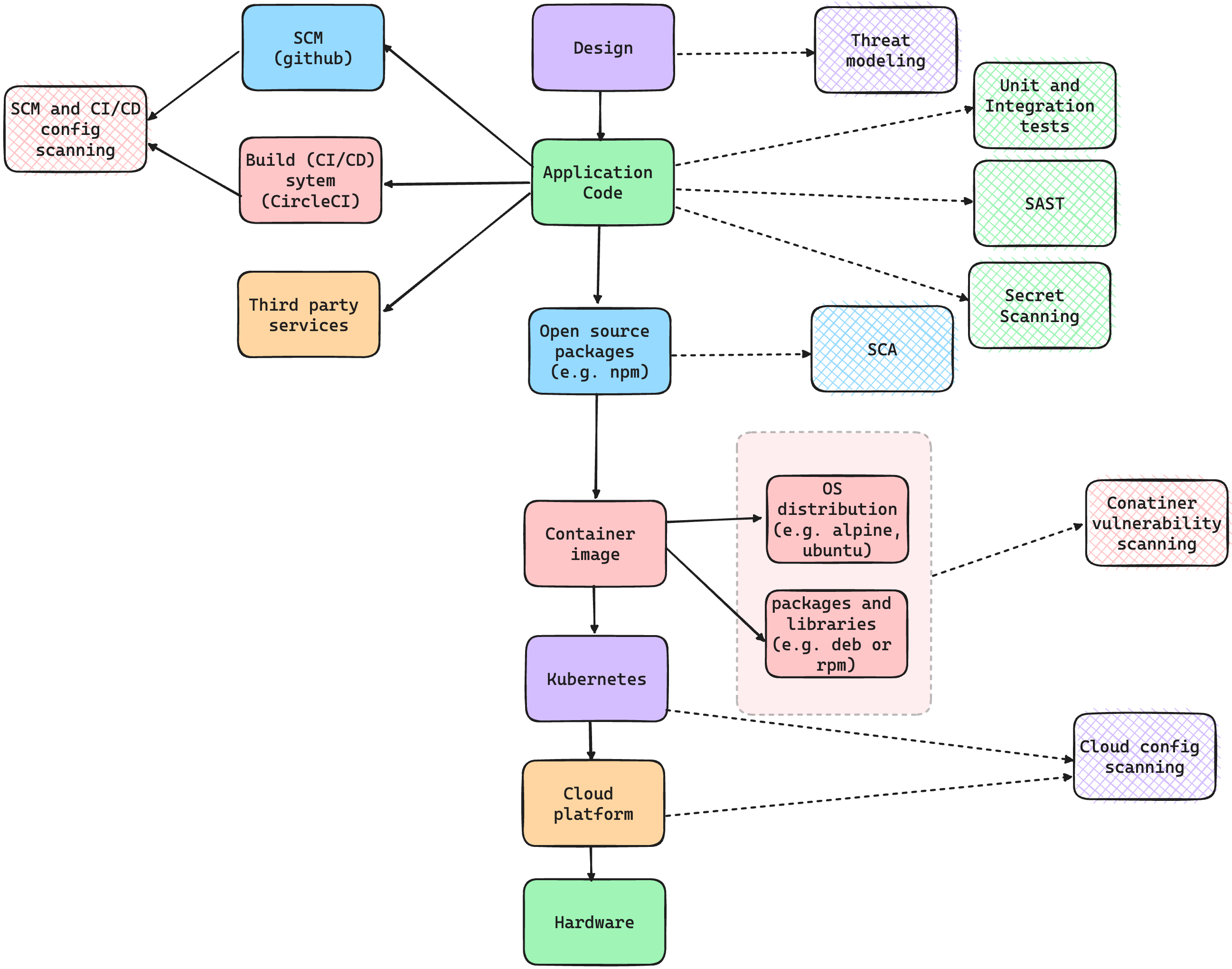

- To recap, the goal of "DevSecOps" is to make security move at the same pace as DevOps, and for this to happen we need to have a clear definition of what our application(s) need to be secure from (the threats), and the controls that we need to implement to protect against them (the mitigations). Then we need to select the right tools and processes to help us verify that these controls are working as expected across all the application layers.

- Finally, here is a diagram that summarizes the DevSecOps tools and processes mentioned above, mapping them to the application's different layers.

Resources

- Certified DevSecOps Professional (CDP) Course: A hands-on DevSecOps Certification Course mainly relying on labs.

- Using Threat Modeling to Create a DevSecOps plan: A talk about using Threat Modeling to create a DevSecOps plan.

- Ultimate DevSecOps library: A library that contains a list of tools and methodologies accompanied with resources.

- Awesome DevSecOps: An authoritative list of awesome DevSecOps tools, built with the help of community experiments and contributions.

- DevSecOps University: A comprehensive collection of DevSecOps learning resources like books, tutorials, infographics, tools, and much more.